Define your Performance Testing strategy with Visual Studio

Performance Testing is an essential part of software testing, with the specific goal of determining how a system performs in terms of responsiveness and stability under a particular workload. In this series of posts we’ll define and execute a good strategy for testing the performance of an application using Visual Studio.

Software Testing

Software testing is key to the success of our applications. Months of work can be wasted if our products are not fully and satisfactorily tested to verify the quality of the deliverables. But what is quality? We can all agree that quality software meets some common-sense criteria, such as:

· Meets set requirements;

· Is easy to use;

· Works as intended, i.e. it does what it is meant to do;

· Is stable (no crashes), consistent and predictable (repeated actions bring the same results, unless unpredictability is in your non-functional requirements!);

· Responds quickly to human interactions; if “quickly” cannot be achieved, replace that with “reasonably”.

Other technical factors also influence the quality of software:

· Maintainability and readability of code: how easy it is to understand what the source code of the application does, and to update it with fixes or enhancements;

· Cyclomatic complexity and class coupling: cyclomatic complexity counts the independent “paths” through the code, while class coupling counts the “references” (dependencies) among the classes used in the application; the higher these values, the more complex the structure of the application, and hence the more prone it is to issues;

· Robustness, resilience and security: all attributes that protect users from critical errors and crashes, application unresponsiveness, and data tampering or loss.
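Visual Studio reports cyclomatic complexity and class coupling for .NET code through its Code Metrics feature. Purely to illustrate what the first metric counts, here is a minimal Python sketch that approximates McCabe's cyclomatic complexity of a single function as one plus the number of decision points. It is a simplification (it ignores, for instance, `match` statements and comprehension filters) and is not how Visual Studio computes the metric; `cyclomatic_complexity` and `SAMPLE` are hypothetical names.

```python
import ast

# AST node types that each introduce one extra decision point (branch or loop).
DECISION_NODES = (ast.If, ast.IfExp, ast.For, ast.AsyncFor, ast.While, ast.ExceptHandler)


def cyclomatic_complexity(source: str) -> int:
    """Approximate McCabe complexity of one function: 1 + number of decision points."""
    complexity = 1
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, DECISION_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # 'a and b and c' short-circuits, adding len(values) - 1 extra paths.
            complexity += len(node.values) - 1
    return complexity


SAMPLE = '''
def classify(n):
    if n < 0:
        return "negative"
    elif n == 0:
        return "zero"
    for d in (2, 3):
        if n % d == 0 and n != d:
            return "composite"
    return "other"
'''

if __name__ == "__main__":
    print(cyclomatic_complexity(SAMPLE))  # 6: if, elif, for, if, and
```

Every extra decision point is one more path that tests have to exercise, which is why the metric is used as a proxy for how hard code is to test and maintain.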

Visual Studio

The purpose of this series of posts is to show how to test web applications for performance. We will use the Visual Studio 2015 Load Test Manager to automate the tests. Visual Studio also integrates with Team Foundation Server (TFS), allowing testers to submit identified bugs as work items, provide evidence in the form of textual descriptions, screenshots and video recordings, and assign the work items to the development team for resolution. Application Lifecycle completely managed!

By the end of this post, you should be able to identify key metrics for software quality, with a special focus on execution performance. As we will see later, under the common umbrella of Performance Testing, multiple attributes of a web application, and of software in general, can be tested and detailed reports extracted.

Types of Software Testing

Testing your software is important, isn’t it? Of course, but what exactly are we testing?

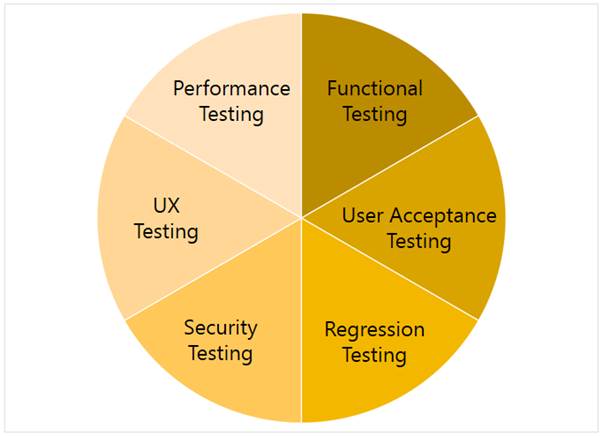

There are different types of testing that you can perform on a software application, whether it is a desktop application, a web application, a mobile app, etc.

Typically, software developers perform Unit Testing as an integral part of their coding. This is often accomplished by writing additional code, usually a separate test project, that tests small portions of the application in isolation. These portions, or units, are the smallest testable parts of an application.
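In Visual Studio, unit tests for a .NET application would typically live in a separate MSTest, NUnit or xUnit test project. As a tool-agnostic illustration, here is a minimal sketch using Python's built-in `unittest` module; `apply_discount` is a hypothetical unit invented for the example.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit under test: apply a percentage discount to a price."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTests(unittest.TestCase):
    """Each test exercises the unit in isolation: no database, UI or network."""

    def test_regular_discount(self):
        self.assertEqual(apply_discount(200.0, 10), 180.0)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_invalid_percentage_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(50.0, 150)


if __name__ == "__main__":
    unittest.main()
```

Because the tests are themselves code, they can be run automatically on every build, which is what makes them the natural first level of testing.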

While Unit Testing is, with good reason, the first level of testing of an application, there is no specific order to follow for the remaining types of testing, although User Acceptance Testing (UAT) is commonly performed towards the end of the application development lifecycle.

UAT, like Functional Testing in general, aims to verify a program by checking it against design documents or specifications; in other words, we test whether the application meets the agreed business requirements.

Regression Testing is also performed towards the end of the application lifecycle and, as the name suggests, seeks to uncover new software bugs, or regressions, in existing functional and non-functional areas of a system after changes, such as enhancements, patches or configuration changes, have been introduced.

Security Testing covers aspects of confidentiality, integrity, authentication, availability, authorization and non-repudiation of data handled by a software application. Discussing all of these concepts would probably require a separate post of its own, so I’ll leave that for another occasion!

Usability Testing (aka UX for User Experience, or UI for User Interface) is a technique for evaluating efficiency, accuracy and consistency of use of an application by an end-user interacting with the software via its human-oriented interface and devices (screen, keyboard, mouse, camera, etc.).

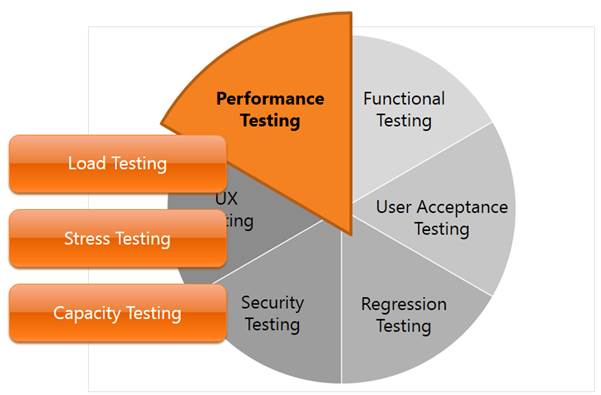

Performance Testing is the focus of this article, and a broad area as well! In general, the aim of Performance Testing is to determine how a system performs in terms of responsiveness and stability under a particular workload. It can also serve to investigate, measure, validate or verify other quality attributes of the system, such as scalability, reliability and resource usage. Under this general umbrella, more specific tests are executed:

· Load Testing is the simplest form of performance testing. A load test is usually conducted to understand the behaviour of the system under a specific expected load. This load can be the expected number of concurrent users on the application performing a specific number of transactions within a set duration. This test reports the response times of all the important business-critical transactions.

· Stress Testing is normally used to understand the upper limits of capacity within the system. This kind of test is done to determine the system’s robustness under extreme load, and helps application administrators determine whether the system will perform sufficiently if the load goes well above the expected maximum.

· Capacity Testing is used to determine how many users and/or transactions a given system will support and still meet performance goals.
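Visual Studio simulates these scenarios with virtual users driven by a load pattern. To make the difference between the three tests concrete, here is a tool-agnostic Python sketch of the core idea: a number of concurrent virtual users repeatedly call an operation while response times are recorded. `handle_request`, `timed_call` and `run_load` are hypothetical names; `handle_request` just sleeps, whereas a real test would call the system under test over the network, usually from dedicated load agents. Threads are adequate here because the simulated work is I/O-bound.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request() -> None:
    """Hypothetical stand-in for one business transaction, e.g. an HTTP request."""
    time.sleep(0.01)  # simulated server processing and network time


def timed_call() -> float:
    """Return the response time of one transaction, in seconds."""
    start = time.perf_counter()
    handle_request()
    return time.perf_counter() - start


def run_load(concurrent_users: int, requests_per_user: int) -> list[float]:
    """Simulate concurrent virtual users issuing requests back to back."""
    def user_session(_: int) -> list[float]:
        return [timed_call() for _ in range(requests_per_user)]

    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        sessions = list(pool.map(user_session, range(concurrent_users)))
    return [t for session in sessions for t in session]


if __name__ == "__main__":
    # Expected load first, then increasing loads as in a stress/capacity test.
    for users in (10, 50, 100):
        times = run_load(users, requests_per_user=5)
        print(f"{users:>3} users: {len(times)} requests, slowest {max(times):.3f} s")
```

A load test runs `run_load` with the expected number of users; stress and capacity tests keep raising `concurrent_users` until response times break the performance goals. With the sleep-based stub the times stay roughly flat, whereas a real system would slow down as it approaches its capacity.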

Methodology for Performance Testing

There is no successful testing without a proper strategy and execution. Nothing should be left to chance, and a good methodology should be adopted for obtaining the best results.

Very good guidance on performance testing can be found on MSDN: Performance Testing Guidance for Web Applications. Although specific to web applications, its recommendations also apply to other types of applications. The core principles of a performance test plan can be summarised in the following points:

Identify the Test Environment:

· Ideally, it should be an exact replica of the production environment. Oh yes, I forgot to mention: Do NOT test directly on the live environment! Performance testing is a stressful activity for your system, and you really do not want to put your live environment under this burden.

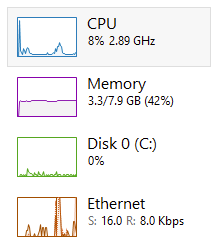

· Monitor hardware, software and network: CPU allocation, memory consumption, and disk and Ethernet usage are the minimum aspects to consider. Network latency, especially when testing remote services, is an important factor that may produce poor performance results, as throttled bandwidth may limit the application’s ability to scale fully.

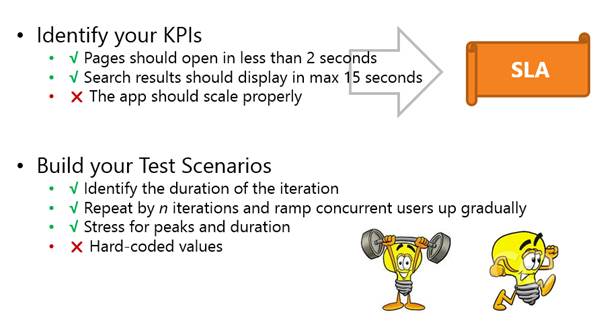
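Visual Studio load tests can collect these measurements as Windows performance counters from the machines under test. As a much smaller, in-process illustration, the following Python sketch measures wall-clock time, CPU time and peak Python memory allocation for a single operation using only the standard library (`time` and `tracemalloc`); `profile_operation` is a hypothetical helper name. It does not capture disk or network usage, nor load on remote servers.

```python
import time
import tracemalloc
from typing import Callable


def profile_operation(operation: Callable[[], object]) -> dict[str, float]:
    """Run one operation and report wall time, CPU time, CPU utilisation and peak memory."""
    tracemalloc.start()
    wall_start = time.perf_counter()
    cpu_start = time.process_time()  # CPU time of this process (user + system)
    operation()
    cpu_used = time.process_time() - cpu_start
    wall = time.perf_counter() - wall_start
    _, peak_bytes = tracemalloc.get_traced_memory()  # peak of Python allocations
    tracemalloc.stop()
    return {
        "wall_s": wall,
        "cpu_s": cpu_used,
        # Roughly the share of one core used: near 1.0 is CPU-bound, near 0 is waiting.
        "cpu_utilisation": cpu_used / max(wall, 1e-9),
        "peak_mb": peak_bytes / 1_000_000,
    }


if __name__ == "__main__":
    print(profile_operation(lambda: sorted(range(1_000_000), reverse=True)))
```

A low CPU utilisation combined with long wall-clock times is a hint that the bottleneck is elsewhere, for example in the network or in a remote service.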

Prepare your Test Scenarios:

· We now get into the specifics of the tool used to execute performance tests. Irrespective of the tool adopted, the key non-functional requirements to test in a software application are: Responsiveness, Scalability and Stability.

· Before executing any test, it is advisable to run a Smoke Test: a smoke test is the initial run of a performance test to see whether your application can perform its operations under a normal load. Consider it a warm-up before the proper exercise. Applications that use JIT compilation or pre-load data into a cache before first use benefit enormously from this preparation phase; without it, those one-off start-up costs would distort the performance results.

· Spike Tests, on the other hand, are a type of performance test focused on determining or validating the performance characteristics of the application when subjected to workload models and load volumes that repeatedly increase beyond anticipated production operations for short periods of time. In practice, Spike Tests measure the performance of an application under intense peak usage. Testing these conditions helps assess the performance boundaries of your application.
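The following Python sketch illustrates both ideas under simple assumptions. `measure_with_warm_up` runs an operation a few times and discards those timings, so one-off start-up costs (simulated here by a cache filled on first call) do not pollute the measurements; `spike_profile` produces a per-second target number of virtual users that jumps from a base load to a peak for short, repeated bursts. All function names and parameter values are hypothetical.

```python
import time
from functools import lru_cache
from typing import Callable


@lru_cache(maxsize=None)
def load_reference_data() -> tuple[int, ...]:
    """Hypothetical operation whose first call is slow because it fills a cache."""
    time.sleep(0.05)  # simulated one-off start-up cost (cache load, JIT, ...)
    return tuple(range(1000))


def measure_with_warm_up(operation: Callable[[], object],
                         warm_up_runs: int, measured_runs: int) -> list[float]:
    """Run the operation warm_up_runs times untimed, then time measured_runs calls."""
    for _ in range(warm_up_runs):
        operation()  # results discarded: this pays the start-up costs
    timings = []
    for _ in range(measured_runs):
        start = time.perf_counter()
        operation()
        timings.append(time.perf_counter() - start)
    return timings


def spike_profile(duration_s: int, base_users: int, peak_users: int,
                  spike_every_s: int, spike_length_s: int) -> list[int]:
    """Target virtual users for each second: base load with short, repeated spikes."""
    return [
        peak_users if second % spike_every_s >= spike_every_s - spike_length_s else base_users
        for second in range(duration_s)
    ]


if __name__ == "__main__":
    print(max(measure_with_warm_up(load_reference_data, warm_up_runs=1, measured_runs=5)))
    print(spike_profile(10, base_users=10, peak_users=100, spike_every_s=5, spike_length_s=1))
    # [10, 10, 10, 10, 100, 10, 10, 10, 10, 100]
```

Without the warm-up run, the first timing would include the simulated 50 ms cache load and inflate every average computed afterwards.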


Create the Baselines:

· The purpose of creating a baseline is to set the standard for comparison. Performance indicators are not absolute metrics; they are relative to an initial condition that is measured in the baseline. Knowing that your application consumes 10% of CPU is pointless without context: what CPU is it, is 10% good or bad, what are your expectations?

· Baseline results can be articulated by using a broad set of key performance indicators, including response time, processor capacity, memory usage, disk capacity, and network bandwidth.
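Comparing a run against the baseline then becomes a matter of relative change. Here is a minimal Python sketch, assuming every metric is “lower is better” (response time, CPU, memory) and using a hypothetical 10% tolerance before a change counts as a regression; the function name, metric names and values are illustrative, not real measurements.

```python
def compare_to_baseline(baseline: dict[str, float], current: dict[str, float],
                        tolerance_pct: float = 10.0) -> dict[str, tuple[float, bool]]:
    """Per metric, return (% change vs baseline, True if it is a regression).

    Assumes every metric is 'lower is better' and present in both runs.
    """
    report = {}
    for metric, base_value in baseline.items():
        change_pct = (current[metric] - base_value) / base_value * 100
        report[metric] = (round(change_pct, 1), change_pct > tolerance_pct)
    return report


# Illustrative numbers only.
BASELINE = {"response_time_s": 1.2, "cpu_pct": 35.0, "memory_mb": 512.0}
LATEST_RUN = {"response_time_s": 1.5, "cpu_pct": 36.0, "memory_mb": 500.0}

if __name__ == "__main__":
    print(compare_to_baseline(BASELINE, LATEST_RUN))
    # {'response_time_s': (25.0, True), 'cpu_pct': (2.9, False), 'memory_mb': (-2.3, False)}
```

Only the response time is flagged here: a 25% slowdown exceeds the tolerance, while the small CPU increase and the memory reduction do not.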

Analyse the Test Results:

· So you wrote your test scenarios, you executed them and you got some results… so what? Are they good, are they bad, should you worry? The answer lies in comparing them with the baselines: is your app performing better or worse? Is the performance acceptable or not? Define “acceptable”! Do not use vague adjectives; be specific about your expectations. To put it more formally, define your Key Performance Indicators (KPIs) as SMART objectives: Specific, Measurable, Attainable, Relevant and Timed. You don’t want to say that a web page should open “quickly”, but rather that it should open in less than 2 seconds when subjected to a concurrent load of 100 users.

· Together, all your KPIs make up your Service Level Agreement (SLA) with your stakeholders: do not commit to performance that is unrealistic or unattainable. Measure first, then deliver.

· Understand the minimum, maximum, median, percentiles and standard deviation: how does your app respond to limit conditions? What is the average behaviour? What user load breaks your KPIs? Is your app a weightlifter or a marathon runner?
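These statistics are easy to compute from the raw response times of a run. The Python sketch below uses the standard `statistics` module and checks the example KPI (“less than 2 seconds”) against the 95th percentile. Using a percentile rather than the maximum is an assumption, and the concurrency condition (100 users) is a property of the test run rather than something the function checks. `summarise` and `meets_kpi` are hypothetical names, and the sample data is made up.

```python
import statistics


def summarise(response_times: list[float]) -> dict[str, float]:
    """Summary statistics (seconds) for the response times of one test run."""
    cut_points = statistics.quantiles(response_times, n=100)  # 99 percentile cut points
    return {
        "min": min(response_times),
        "max": max(response_times),
        "mean": statistics.mean(response_times),
        "median": statistics.median(response_times),
        "p90": cut_points[89],
        "p95": cut_points[94],
        "stdev": statistics.stdev(response_times),
    }


def meets_kpi(stats: dict[str, float], threshold_s: float = 2.0, measure: str = "p95") -> bool:
    """True if the chosen statistic stays under the threshold, e.g. p95 < 2 s."""
    return stats[measure] < threshold_s


if __name__ == "__main__":
    # Made-up run: 98 fast page loads and 2 very slow ones.
    run = [0.5] * 98 + [5.0] * 2
    stats = summarise(run)
    print(stats["p95"], stats["max"])       # 0.5 5.0
    print(meets_kpi(stats))                 # True
    print(meets_kpi(stats, measure="max"))  # False
```

The example shows why the choice of statistic belongs in the KPI definition: this run satisfies a p95 objective, while its worst case is 2.5 times over the limit.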

Performance testing is not rocket science, but analysing the results does not yield an immediate solution. Rather, the process is iterative: testing, tuning, re-testing, re-tuning, and so on, until the best attainable performance is reached. This is why the execution of performance tests needs to be automated, which is the subject of the next part of this post.
